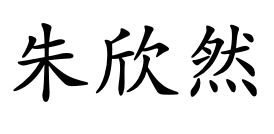

Xinran Zhu


I am a final-year Ph.D. candidate in Applied Math at Cornell University, advised by David Bindel. Before coming to Cornell, I received my bachelor’s degree from Shanghai Jiao Tong University (Zhiyuan College). My research interests lie in the numerical analysis of kernel methods.

Publications

- NeurIPS. Variational Gaussian Processes with Decoupled Conditionals. In Advances in Neural Information Processing Systems, 2023.

- KBS. SigOpt Mulch: An Intelligent System for AutoML of Gradient Boosted Trees. Knowledge-Based Systems, 2023.